SVG-T2I

Scaling up Text-to-Image Latent Diffusion Models without Variational Autoencoders

Minglei Shi1*, Haolin Wang1*, Borui Zhang1, Wenzhao Zheng1, Bohan Zeng2, Ziyang Yuan2†, Xiaoshi Wu2, Yuanxing Zhang2, Huan Yang2, Xintao Wang2, Pengfei Wan2, Kun Gai2, Jie Zhou1, Jiwen Lu1†

1Tsinghua University 2KlingTeam, Kuaishou Technology

* Equal contribution † Corresponding author

Important Note: This repository implements SVG-T2I, a text-to-image diffusion framework that performs visual generation directly in Visual Foundation Model (VFM) representation space, rather than in pixel space or a VAE latent space.

📰 News

- [2025-12-13] 📢✨ We are excited to announce the official release of SVG-T2I, including pre-trained checkpoints as well as complete training and inference code.

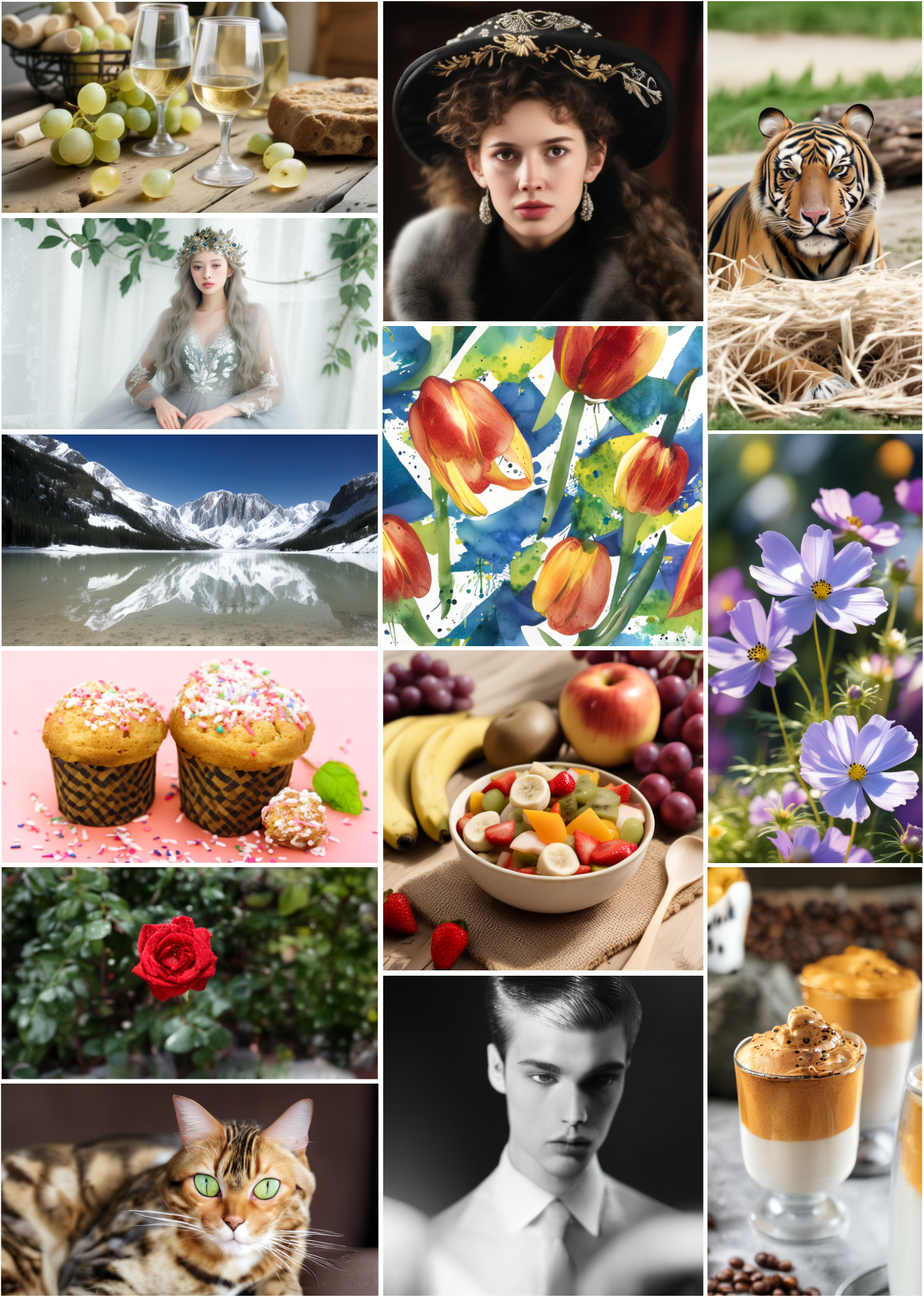

🖼️ Gallery

High-fidelity samples generated by SVG-T2I.

🧠 Overview

Visual generation grounded in Visual Foundation Model (VFM) representations offers a promising unified approach to visual understanding and generation. However, large-scale text-to-image diffusion models operating directly in VFM feature space remain underexplored.

To address this, SVG-T2I extends the SVG framework to enable high-quality text-to-image synthesis directly in the VFM domain using a standard diffusion pipeline. The model achieves competitive performance, reaching 0.75 on GenEval and 85.78 on DPG-Bench, demonstrating the strong generative capability of VFM representations.

We fully open-source the autoencoder and generation models, along with their training, inference, and evaluation pipelines, to support future research in representation-driven visual generation.

Why SVG-T2I?

✨ Direct Use of VFM Representations:

SVG-T2I performs generation directly in the feature space of Visual Foundation Models (e.g., DINOv3), rather than merely aligning a separate latent space with it. This preserves the rich semantic structure learned through large-scale self-supervised visual representation learning.

🔗 Unified Representation for Understanding and Generation:

By sharing the same VFM representation space across visual understanding, perception, and generation, SVG-T2I unlocks strong potential for downstream tasks such as image editing, retrieval, reasoning, and multimodal alignment.

🧩 Fully Open-Sourced Pipeline:

We fully open-source the entire training and inference pipeline, including the SVG autoencoder, diffusion model, evaluation code, and pretrained checkpoints, to facilitate reproducibility and future research in representation-driven visual generation.

🌟 Key Components

| Component | Description |
|---|---|
| 1. SVG Autoencoder | A novel latent codec consisting of a frozen VFM (DINOv3/DINOv2/SigLIP2/MAE) encoder, an optional residual reconstruction branch, and a trainable convolutional decoder. ❌ No Quantization ❌ No KL-loss ❌ No Gaussian Assumption |
| 2. Latent Diffusion | A single-stream Diffusion Transformer trained directly on representation space. Supports progressive training (256→512→1024) and is optimized on large-scale text-image pairs. |
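
To make the progressive schedule (256→512→1024) concrete, the sketch below estimates how many latent tokens the diffusion transformer would see at each resolution. It assumes that the "16" in DINOv3s16p denotes a 16×16-pixel patch size and that each patch yields one latent token; it ignores any class or register tokens. `latent_grid` is a hypothetical helper, not part of this repository.

```python
# Assumption: the encoder splits the image into non-overlapping 16x16 patches
# and emits one latent token per patch (class/register tokens are ignored).
PATCH_SIZE = 16


def latent_grid(resolution: int, patch_size: int = PATCH_SIZE) -> tuple[int, int]:
    """Return (tokens_per_side, total_tokens) for a square image."""
    if resolution % patch_size != 0:
        raise ValueError(f"{resolution} is not divisible by patch size {patch_size}")
    side = resolution // patch_size
    return side, side * side


# Resolutions used by the progressive training stages.
for res in (256, 512, 1024):
    side, tokens = latent_grid(res)
    print(f"{res}x{res} image -> {side}x{side} grid, {tokens} tokens")
```

Under these assumptions, each doubling of resolution quadruples the sequence length (256, 1024, then 4096 tokens), which is one reason to start training at low resolution.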

🎮 Model Zoo

SVG Autoencoder

| Model | Notes | Resolution | Encoder (Params) | Download URL |
|---|---|---|---|---|
| Autoencoder-P | Stage1 (Low-resol) | 256 | DINOv3s16p (29M) | Hugging Face |
| Autoencoder-P | Stage2 (Middle-resol) | 512 | DINOv3s16p (29M) | Hugging Face |
| Autoencoder-P | Stage3 (High-resol) (😄 Default) | 1024 | DINOv3s16p (29M) | Hugging Face |
| Autoencoder-R | Stage1 (Low-resol) | 256 | DINOv3s16p + Residual ViT (29M + 22M) | Hugging Face |
| Autoencoder-R | Stage2 (Middle-resol) | 512 | DINOv3s16p + Residual ViT (29M + 22M) | Hugging Face |
| Autoencoder-R | Stage3 (High-resol) | 1024 | DINOv3s16p + Residual ViT (29M + 22M) | Hugging Face |

SVG-T2I DiT

| Notes | Resolution | Parameters | Text Encoder | Representation Encoder | Download URL |
|---|---|---|---|---|---|
| Stage1 (Low-resol) | 256 | 2.6B | Gemma-2-2B | DINOv3s16p | Hugging Face |
| Stage2 (Middle-resol) | 512 | 2.6B | Gemma-2-2B | DINOv3s16p | Hugging Face |
| Stage3 (High-resol) | 1024 | 2.6B | Gemma-2-2B | DINOv3s16p | Hugging Face |
| Stage4 (SFT) (😄 Default) | 1024 | 2.6B | Gemma-2-2B | DINOv3s16p | Hugging Face |

Model Name: TxxxM indicates the total number of images the model has cumulatively seen during training.

🛠️ Installation

1. Environment Setup

conda create -n svg_t2i python=3.10 -y

conda activate svg_t2i

pip install -r requirements.txt

2. Download DINOv3

SVG-T2I relies on DINOv3 as the frozen encoder.

# Download DINOv3 pretrained weights and update your config paths

git clone https://github.com/facebookresearch/dinov3.git

3. Download Pre-trained Models

You can download all stage-wise pretrained models and checkpoints from our official Hugging Face repository, including the SVG autoencoder and SVG-T2I diffusion models used for training and evaluation:

https://huggingface.co/KlingTeam/SVG-T2I

These pretrained weights are released to support academic research, benchmarking, and a wide range of downstream applications, and can be freely used for experimentation, analysis, and further development.
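
As one possible way to fetch the checkpoints programmatically, the sketch below uses `snapshot_download` from the `huggingface_hub` package (which must be installed separately). The repository ID comes from the URL above; the `checkpoints` target directory and the `download_checkpoints` helper are hypothetical names chosen for illustration.

```python
from pathlib import Path

# Repository ID taken from the Hugging Face URL above.
REPO_ID = "KlingTeam/SVG-T2I"


def download_checkpoints(local_dir: str = "checkpoints") -> Path:
    """Download every file in the model repository into local_dir.

    The default local_dir is a hypothetical location; adjust it to your setup.
    """
    # Imported lazily so this module can be loaded without huggingface_hub.
    from huggingface_hub import snapshot_download

    path = snapshot_download(repo_id=REPO_ID, local_dir=local_dir)
    return Path(path)


# Usage (requires network access):
#   ckpt_dir = download_checkpoints()
#   print(ckpt_dir)
```

This downloads all stages at once; if only one stage is needed, `snapshot_download` also accepts an `allow_patterns` argument to restrict which files are fetched.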

📦 Data Preparation

1. Autoencoder Training Data

Any large-scale image dataset works (e.g., ImageNet-1K). Update the data section of the config files in autoencoder/configs/pure/*.yaml:

For ImageNet-1K

data:
  target: "utils.data_module_allinone.DataModuleFromConfig"
  params:
    batch_size: 64
    wrap: true
    num_workers: 16
    train:
      target: ldm.data.imagenet.ImageNetTrain
      params:
        data_root: Your ImageNet Path
        size: 256
    validation:
      target: ldm.data.imagenet.ImageNetValidation
      params:
        data_root: Your ImageNet Path
        size: 256

For a custom dataset

Custom datasets are supported through a JSONL file; see configs/example.jsonl for an example. The prompt field is used only for the generation task.

Example JSONL Format:

{"path": "test/man.jpg", "prompt": "A man"}

{"path": "test/man.jpg", "prompt": "A man"}

...

data:
  target: utils.data_module_allinone.DataModuleFromConfigJson
  params:
    batch_size: 3 # batch size per GPU
    wrap: true
    train_resol: 256
    json_path: configs/example.jsonl
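
Before launching a long training run, it can help to check the JSONL file for malformed lines or missing images. The following is a minimal sketch of such a check, not part of this repository: `validate_jsonl` is a hypothetical helper, and it assumes that each `path` is relative to an `image_root` directory you supply.

```python
import json
from pathlib import Path


def validate_jsonl(jsonl_path, image_root=".", require_prompt=False):
    """Return a list of problems found; an empty list means the file looks fine."""
    problems = []
    with open(jsonl_path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue  # tolerate blank lines
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(record, dict) or "path" not in record:
                problems.append(f"line {lineno}: missing 'path' field")
                continue
            # The prompt is only needed for text-to-image training.
            if require_prompt and not record.get("prompt"):
                problems.append(f"line {lineno}: missing 'prompt' field")
            # Assumption: image paths are relative to image_root.
            if not (Path(image_root) / record["path"]).is_file():
                problems.append(f"line {lineno}: image not found: {record['path']}")
    return problems
```

For autoencoder training, `require_prompt` can stay `False`; for text-to-image training, set it to `True` so that records without a caption are reported.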

2. Text-to-Image Training Data

Example JSONL Format:

{"path": "test/man.jpg", "prompt": "A man"}

{"path": "test/man.jpg", "prompt": "A man"}

...
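
A file in this format can be produced from any list of image/caption pairs. The sketch below shows one way to do so with the standard library only; `write_jsonl` is a hypothetical helper, and how the image paths are resolved at training time is not specified here, so treat the path convention as an assumption.

```python
import json


def write_jsonl(pairs, out_path):
    """Write (image_path, prompt) pairs as one JSON object per line.

    Returns the number of records written.
    """
    count = 0
    with open(out_path, "w", encoding="utf-8") as f:
        for image_path, prompt in pairs:
            record = {"path": image_path, "prompt": prompt}
            # ensure_ascii=False keeps non-English captions readable in the file.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count


# Usage:
#   write_jsonl([("test/man.jpg", "A man")], "train.jsonl")
```

With the default `json.dumps` separators, each line has the same shape as the example records above.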

🚀 Training

SVG-T2I training is divided into two distinct stages.

Stage 1: Train SVG Autoencoder

Navigate to the autoencoder directory and launch training:

cd autoencoder

bash run_train.sh <GPU NUM> configs/pure/svg_autoencoder_P_dd_M_IN_stage1_bs64_256_gpu1_forTest

# example

bash run_train.sh 1 configs/pure/svg_autoencoder_P_dd_M_IN_stage1_bs64_256_gpu1_forTest

- Output: Results will be saved in autoencoder/logs.

- Note: You can modify training hyperparameters and output paths directly inside run_train.sh or the configuration YAML file.

Stage 2: Train SVG-DiT (Diffusion)

Navigate to svg_t2i. We provide scripts for both single-node and multi-node training.

Single Node Example:

cd svg_t2i

bash scripts/run_train_1gpus_forTest.sh <RANK ID>

# example

bash scripts/run_train_1gpus_forTest.sh 0

Multi-Node Example:

bash scripts/run_train_mnodes.sh 0

bash scripts/run_train_mnodes.sh 1

bash scripts/run_train_mnodes.sh 2

bash scripts/run_train_mnodes.sh 3

- Output: Results will be saved in svg_t2i/results.

- Note: You can adjust learning rates, batch sizes, number of GPUs, and save directories directly in the training scripts.

🎨 Inference & Image Generation

Generate images using a pretrained SVG-DiT model.

After downloading the pretrained checkpoints, you will obtain a pre-trained/ directory.

Please place this directory under the svg_t2i/ folder before running inference.

cd svg_t2i

bash scripts/sample.sh

- Output: Results will be saved in svg_t2i/samples.

- Note: You can modify sampling parameters, prompt settings, and output directories directly inside sample.sh.
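
Since inference expects the pre-trained/ directory under svg_t2i/, a quick preflight check can catch a misplaced download before sampling starts. The sketch below is illustrative only: `missing_inference_paths` is a hypothetical helper, and it checks just the two paths named in this section.

```python
from pathlib import Path


def missing_inference_paths(repo_root="."):
    """Return the expected inference paths that do not exist under repo_root."""
    root = Path(repo_root)
    expected = [
        root / "svg_t2i" / "pre-trained",           # downloaded checkpoints
        root / "svg_t2i" / "scripts" / "sample.sh",  # sampling script
    ]
    return [str(p) for p in expected if not p.exists()]


# Usage, run from the repository root:
#   missing = missing_inference_paths()
#   if missing:
#       print("Missing before inference:", missing)
```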

📝 Citation

If you find this work helpful, please cite our papers:

@misc{svgt2i2025,

title={SVG-T2I: Scaling Up Text-to-Image Latent Diffusion Model Without Variational Autoencoder},

author={Minglei Shi and Haolin Wang and Borui Zhang and Wenzhao Zheng and Bohan Zeng and Ziyang Yuan and Xiaoshi Wu and Yuanxing Zhang and Huan Yang and Xintao Wang and Pengfei Wan and Kun Gai and Jie Zhou and Jiwen Lu},

year={2025},

eprint={2512.11749},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2512.11749},

}

@misc{svg2025,

title={Latent Diffusion Model without Variational Autoencoder},

author={Minglei Shi and Haolin Wang and Wenzhao Zheng and Ziyang Yuan and Xiaoshi Wu and Xintao Wang and Pengfei Wan and Jie Zhou and Jiwen Lu},

year={2025},

eprint={2510.15301},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2510.15301},

}

💡 Acknowledgments

SVG-T2I builds upon the giants of the open-source community:

- SVG: Base pipeline and core idea.

- Lumina-Image-2.0: DiT architecture and base training code.

- DINOv3: State-of-the-art semantic representation encoder.

For any questions, please open a GitHub Issue.
